TEDxLjubljana

The bottleneck of human-computer interaction

Radar sensing

University of Primorska

Using radar-on-chip sensors such as Google Soli together with machine learning algorithms, we explore the world of microgestures. The goal is to build systems that can: (i) sense hand and finger gestures to enable inconspicuous, precise and flexible object-oriented interactions with everyday objects; (ii) sense even when the sensor is occluded by materials (e.g. a phone in a pocket or a leather bag); and (iii) sense with low energy consumption. More about the project: https://solidsonsoli.famnit.upr.si/home
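The page does not say which learning algorithm the project uses. As a purely illustrative stand-in, the sketch below shows only the last step of a gesture pipeline: assigning a feature vector to a gesture with a nearest-centroid classifier. The two-number feature vectors, the gesture labels and the function names are hypothetical; a real radar pipeline would derive much richer features from the sensor signal.

```python
import math
from collections import defaultdict


def train_centroids(samples: list[tuple[list[float], str]]) -> dict[str, list[float]]:
    """Average the (hypothetical) feature vectors of each gesture label."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def classify(features: list[float], centroids: dict[str, list[float]]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Toy training data: made-up feature vectors, not real radar measurements.
training = [
    ([0.9, 0.1], "tap"),
    ([1.1, 0.2], "tap"),
    ([0.2, 0.8], "swipe"),
    ([0.1, 1.0], "swipe"),
]
centroids = train_centroids(training)
print(classify([1.0, 0.0], centroids))  # tap
```

Nearest-centroid is chosen here only because it fits in a few lines; it says nothing about the models used in the publications below.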

Publications:

Čopič Pucihar, K., Attygalle, N. T., Kljun, M., Sandor, C., & Leiva, L. A. "Solids on Soli: Millimetre-Wave Radar Sensing through Materials." PACM HCI, EICS 2022. (HONORABLE MENTION)

Čopič Pucihar, K., Sandor, C., Kljun, M., Huerst, W., Plopski, A., Taketomi, T., ... & Leiva, L. A. (2019, May). The missing interface: micro-gestures on augmented objects. CHI 2019

Leiva, L. A., Kljun, M., Sandor, C., & Čopič Pucihar, K. (2020, Oct). The Wearable Radar: Sensing Gestures Through Fabrics. MobileHCI 2020

Paper interfaces

University of Primorska

We explore how to augment paper by modifying its optical properties (e.g. through the application of inks or paper perforation) and by manipulating light (e.g. light generated by a tablet computer placed underneath the paper). We show how to modify the appearance of printed content and create the illusion that static objects are perceptually dynamic. We also explore how to integrate multiple layers of information into paper to enable novel paper-based interactions. More about the project: https://hicup.famnit.upr.si/index.php/2021/11/05/paper-interfaces/
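One simplified way to picture the perforated-paper idea is as a mask: if the paper is treated as fully opaque except at its holes, a viewer sees only the display pixels directly beneath a perforation. The sketch below models that assumption with small integer grids. The function name and the binary hole mask are hypothetical, and the model ignores light scattering through the paper itself.

```python
Grid = list[list[int]]


def visible_through_holes(frame: Grid, holes: Grid) -> Grid:
    """Keep display pixels under a hole (holes[y][x] == 1); covered pixels read as 0."""
    return [
        [pixel if hole else 0 for pixel, hole in zip(frame_row, hole_row)]
        for frame_row, hole_row in zip(frame, holes)
    ]


# A static perforation pattern and two different frames shown by the display below it.
holes = [[1, 0, 1],
         [0, 1, 0]]
frame_a = [[9, 9, 9],
           [9, 9, 9]]
frame_b = [[5, 9, 1],
           [9, 7, 9]]
print(visible_through_holes(frame_a, holes))  # [[9, 0, 9], [0, 9, 0]]
print(visible_through_holes(frame_b, holes))  # [[5, 0, 1], [0, 7, 0]]
```

Under this model only the pixels beneath holes matter, so changing just those pixels over time changes what the static sheet appears to show.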

Publications:

Campos C., Kljun M., and Čopič Pucihar K. 2022. Dynamic Pinhole Paper: Interacting with Horizontal Displays through Perforated Paper. Proc. PACM HCI, ACM ISS, https://doi.org/10.1145/3567720

Campos C., Kljun M., Sandak J., and Čopič Pucihar K. 2022. LightMeUp: Back-print Illumination Paper Display with Multi-stable Visuals. PACM HCI, ACM ISS, https://doi.org/10.1145/3570333

Campos, C.; Sandak, J.; Kljun, M.; Čopič Pucihar, K. The Hybrid Stylus: A Multi-Surface Active Stylus for Interacting with and Handwriting on Paper, Tabletop Display or Both. Sensors 2022, https://doi.org/10.3390/s22187058

Campos C., Čopič Pucihar K., Kljun M. (2020, Oct) Pinhole Paper: Making Static Objects Perceptually Dynamic with Rear Projection on Perforated Paper. MobileHCI 2020

AR supported language learning

University of Primorska

Experiential learning (ExL) is the process of learning through experience, or more specifically “learning through reflection on doing”. In our work, we propose a series of applications that simulate such experiences in Augmented Reality (AR) to address the problem of language learning, specifically vocabulary learning. More about the project: https://hicup.famnit.upr.si/index.php/2022/10/11/arlanglearn/

Publications:

Weerasinghe, M., Biener, V., Grubert, J., Quigley, A., Toniolo, A., Čopič Pucihar, K., & Kljun, M. (2022). VocabulARy: Learning Vocabulary in AR Supported by Keyword Visualisations. IEEE Transactions on Visualization and Computer Graphics. https://doi.org/10.1109/TVCG.2022.3203116

Weerasinghe, M., Quigley, A., Čopič Pucihar, K., Toniolo, A., Miguel, A., & Kljun, M. (2022). Arigatō: Effects of Adaptive Guidance On Engagement and Performance in Augmented Reality Learning Environments. IEEE Transactions on Visualization and Computer Graphics. https://doi.org/10.1109/TVCG.2022.3203088

High-precision micro instructions in AR

University of Primorska

Handheld AR systems can be used as support tools for performing high-precision micro tasks, such as circuit board debugging. Here we look at how system latency affects users' performance. In a video see-through device, system latency results in a delay between an action in the physical world and the corresponding image on the display. This raises two research questions: (i) what is the minimal latency users notice in high-precision micro tasks, and (ii) how does latency affect task completion time and accuracy?
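A back-of-the-envelope way to see why latency matters at this scale: if the hand moves at a roughly constant speed v, the displayed image lags the real hand by about v × Δt, where Δt is the system latency. The function below computes that offset. The hand speed and latency values are illustrative assumptions, not measurements from the study.

```python
def lag_offset_mm(hand_speed_mm_per_s: float, latency_ms: float) -> float:
    """Distance the hand travels before its displayed image catches up.

    Assumes constant hand speed over the latency window (a simplification).
    """
    return hand_speed_mm_per_s * latency_ms / 1000.0


# Illustrative values: a tool tip moving at 20 mm/s under three latencies.
for latency_ms in (50.0, 100.0, 200.0):
    print(f"{latency_ms:.0f} ms -> {lag_offset_mm(20.0, latency_ms):.1f} mm")
# 50 ms -> 1.0 mm
# 100 ms -> 2.0 mm
# 200 ms -> 4.0 mm
```

At 20 mm/s and 100 ms the on-screen hand is about 2 mm behind the real one, already more than the 1.27 mm pin pitch of a common SOIC chip package.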

Publications:

Fuvattanasilp, V., Kljun, M., Kato, H., & Čopič Pucihar, K. (2020, Oct) The Effect of Latency on High Precision Micro Instructions in Mobile AR. MobileHCI 2020

Interactive Documentaries

University of Primorska

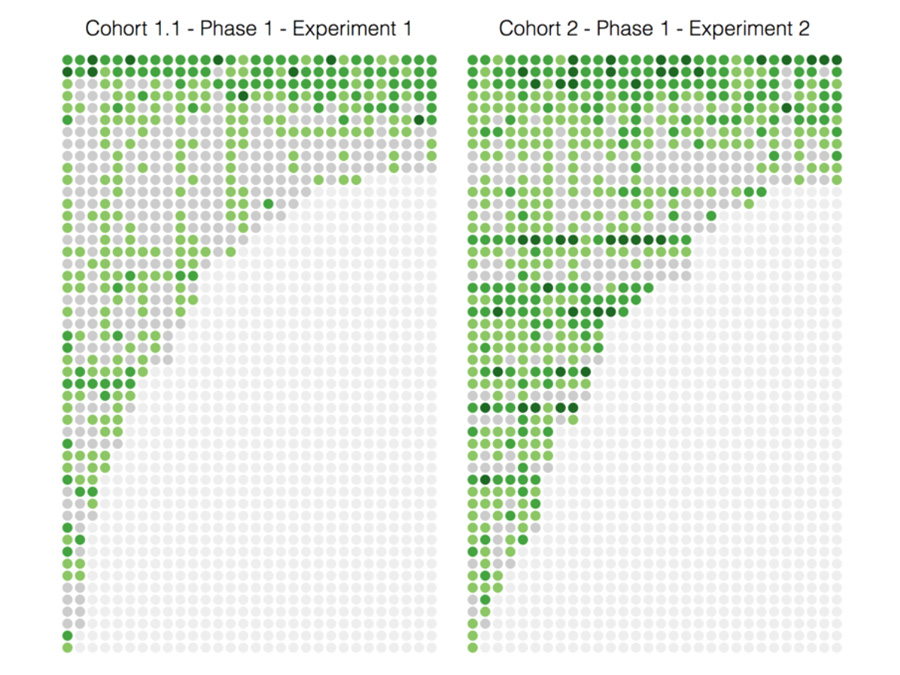

Interactive web documentaries have the potential to engage people with important topics through innovative storytelling approaches. Understanding how users interact with web documentaries, and to what extent they consume their content, can help documentary filmmakers reach a broader or more engaged audience. This project contributes to filling this gap in knowledge by analysing iOtok, a serialized web documentary that we also helped build in collaboration with CORA and ASTRAL FILMS. The 13 episodes of iOtok were released weekly on an online portal showcasing a variety of features (chat, souvenirs, animated 360° panoramas, etc.). Over a period of one year, we collected data from 20,000 sessions, 12,200 videos watched, newsletter engagement and one online questionnaire. We analyse these data using advanced visualization and computational techniques that build on standard observational techniques, such as manual coding by multiple researchers, to provide insight into the impact of serialization and interactivity on audience reception, user engagement and viewing behaviour.

Publications:

Ducasse, J., Kljun, M., & Čopič Pucihar, K. (2020). Interactive Web Documentaries: A Case Study of Audience Reception and User Engagement on iOtok. International Journal of Human–Computer Interaction.

Adaptive m-learning systems

University of Primorska

Mobile learning (m-learning) allows users to tailor their professional training and education to their needs and time constraints. However, in self-paced education it is very hard to maintain user retention and engagement. To help address this gap in knowledge, we designed and developed an m-learning platform for corporate environments based on adaptive triggering, which aims to encourage users to use the platform regularly. We evaluated the application in the wild, in the corporate environments of companies of different sizes, with 300 users.
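The page does not describe how the platform's triggers adapt, so the sketch below is only a hypothetical illustration of what "adaptive" can mean, not the published algorithm. It assumes a simple rule: the delay before the next reminder doubles each time a learner ignores one (up to a cap) and resets once the learner engages. The class name, its fields and all constants are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class TriggerScheduler:
    """Hypothetical adaptive reminder scheduler (not the platform's actual logic).

    Engaged learners keep getting timely nudges; learners who ignore reminders
    are contacted less often, so they are not spammed.
    """
    base_hours: float = 24.0   # delay after an engaged response
    max_hours: float = 168.0   # cap the back-off at one week
    backoff: float = 2.0       # multiplier applied after an ignored reminder
    interval_hours: float = field(init=False)

    def __post_init__(self) -> None:
        self.interval_hours = self.base_hours

    def record_response(self, engaged: bool) -> float:
        """Update and return the delay (in hours) until the next reminder."""
        if engaged:
            self.interval_hours = self.base_hours
        else:
            self.interval_hours = min(self.interval_hours * self.backoff, self.max_hours)
        return self.interval_hours


scheduler = TriggerScheduler()
for engaged in (False, False, False, True):
    print(scheduler.record_response(engaged))
# 48.0, 96.0, 168.0 (capped from 192), then 24.0 after the learner engages
```

Exponential back-off with a reset is just one plausible policy; a real system might also adapt to the time of day or the learner's progress.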

Publications:

Kljun, M., Krulec R., Čopič Pucihar, K., & Solina, F. (2019). Persuasive technologies in m-learning for training professionals: how to keep learners engaged with adaptive triggering. IEEE Transactions on Learning Technologies, 12(3), 370-383.

Digital Augmentation of Comic Books

University of Primorska

Digital augmentation of print media can provide contextually relevant audio, visual, or haptic content to supplement static text and images. The design of such augmentation can have a significant impact on the reading experience. Here we explore how best to design for this new medium.

Publications:

Kljun, M., Čopič Pucihar, K., Alexander, J., Weerasinghe, M., Campos, C., Ducasse, J., ... & Čelar, M. (2019, May). Augmentation not duplication: considerations for the design of digitally-augmented comic books. CHI 2019.

Augmented Reality as a Crafting Tool

University of Primorska

In this project we explore how Augmented Reality can be used as a crafting tool for building real-world objects. In this early work we explore how novice users can be supported in creating physical sketches through virtual tracing, i.e. creating a physical sketch on paper from a virtual image shown on the handheld device. To address the challenge of pose tracking while mitigating its effects on virtual tracing, we use a Dual Camera Magic Lens, in which the front-facing camera is used for tracking while the back camera concurrently provides the view of the scene.
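Because both cameras are rigidly mounted in the same device, the back camera's pose can in principle be derived from the tracked front-camera pose by chaining it with the fixed transform between the two cameras (obtained from calibration). The sketch below shows that composition with 4×4 homogeneous matrices. The 180° rotation and 8 mm offset between the cameras are hypothetical values, and the page does not state how the original system computed the pose.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Matrix:
    """Homogeneous transform that only translates."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def back_camera_pose(world_from_front: Matrix, front_from_back: Matrix) -> Matrix:
    """world_from_back = world_from_front * front_from_back (maps back-camera points to world)."""
    return matmul(world_from_front, front_from_back)


# Hypothetical extrinsics: the back camera faces the opposite way (180 deg about y)
# and sits 8 mm behind the front camera along the front camera's z axis.
front_from_back = [[-1.0, 0.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0, 0.0],
                   [0.0, 0.0, -1.0, -0.008],
                   [0.0, 0.0, 0.0, 1.0]]
world_from_front = translation(0.1, 0.2, 0.5)  # illustrative tracked pose, in metres
world_from_back = back_camera_pose(world_from_front, front_from_back)
print([row[3] for row in world_from_back[:3]])  # back-camera position in world coordinates
```

The point of the split is that tracking quality no longer depends on what the back camera sees, so a hand and pen covering the paper do not disturb the pose estimate.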

Publications:

Čopič Pucihar, K., Grubert, J., & Kljun, M. (2015, September). Dual Camera Magic Lens for Handheld AR Sketching. INTERACT '15, LNCS

Dual-view and the Magic Lens: User vs. Device-perspective Rendering

Lancaster University

The magic lens paradigm, a commonly used descriptor for handheld Augmented Reality (AR), presents the user with dual views: the augmented view (magic lens) that appears on the device, and the real view of the surroundings (what the user can see around the perimeter of the device). Unfortunately, in common AR implementations these two do not match. In this project we uncover how this mismatch affects the users.
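Device-perspective rendering draws the augmented view from the camera's point of view, whereas user-perspective rendering treats the screen as a window and renders from the user's eye. A common way to implement the latter is an off-axis (asymmetric) perspective frustum. The sketch below computes its near-plane bounds for a screen lying in the plane z = 0 with its centre at the origin, assuming the eye position (for example from front-camera face tracking) is already known in the same coordinates. All names and dimensions are illustrative, not taken from the studies.

```python
from typing import NamedTuple


class Frustum(NamedTuple):
    left: float
    right: float
    bottom: float
    top: float
    near: float


def user_perspective_frustum(eye: tuple[float, float, float],
                             screen_w: float, screen_h: float, near: float) -> Frustum:
    """Off-axis frustum through a screen centred at the origin in the z = 0 plane.

    eye is (x, y, z) in metres with z > 0 (distance in front of the screen).
    Bounds are given on the near plane, as an OpenGL-style glFrustum call expects;
    the matching view transform translates the scene by -eye.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    scale = near / ez  # similar triangles: project the screen edges onto the near plane
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
    )


# A 14 cm x 7 cm screen viewed from 35 cm: centred eye vs. eye moved 5 cm to the right.
centred = user_perspective_frustum((0.0, 0.0, 0.35), 0.14, 0.07, 0.1)
shifted = user_perspective_frustum((0.05, 0.0, 0.35), 0.14, 0.07, 0.1)
print(centred)  # symmetric bounds
print(shifted)  # asymmetric: the frustum skews away from the eye's offset
```

A device-perspective renderer would instead keep the camera's fixed, symmetric frustum regardless of where the user's eyes are, which is the source of the mismatch described above.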

Publications:

Čopič Pucihar, K., Coulton, P., & Alexander, J. (2013, December). Evaluating dual-view perceptual issues in handheld augmented reality: device vs. user perspective rendering. In Proceedings of the 15th ACM on International conference on multimodal interaction (pp. 381-388). ACM.

Čopič Pucihar, K., Coulton, P., & Alexander, J. (2014, April). The use of surrounding visual context in handheld AR: device vs. user perspective rendering. In Proceedings of the 32nd annual ACM conference on Human factors in computing systems (pp. 197-206). ACM.

Hybrid Magic Lens

Lancaster University

Previous studies identified shortcomings of the magic lens interaction paradigm, ranging from poor performance in information browsing tasks to the undesired effects of the dual-view problem, which degrade users’ ability to relate augmented content to the real world and to use the existing contextual information. In this project we explore whether a hybrid magic lens, i.e. a magic lens designed with more than one view, can improve such interaction and benefit the user.

Publications:

Čopič Pucihar, K., & Coulton, P. (2014, September). Utilizing contact-view as an augmented reality authoring method for printed document annotation. In Mixed and Augmented Reality (ISMAR), 2014 IEEE International Symposium on (pp. 299-300). IEEE.

Čopič Pucihar, K., & Coulton, P. (2014, September). Contact-view: A magic-lens paradigm designed to solve the dual-view problem. In Mixed and Augmented Reality (ISMAR), 2014 IEEE International Symposium on (pp. 297-298). IEEE.

Enhanced virtual transparency

Lancaster University

In this project we looked at different ways of improving the virtual transparency of the magic lens. We looked at how to improve depth perception when looking through the magic lens using parallax barrier stereoscopic displays, and how to improve scene readability using high-resolution textures.
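For context on the stereoscopic display, the textbook geometry of a two-view parallax barrier follows from similar triangles: with pixel-column pitch p, eye separation e and viewing distance D (from the eyes to the barrier), the barrier sits a gap g = pD/e in front of the pixels and its slits repeat with pitch b = 2pD/(D + g). The sketch below evaluates these formulas. The numbers are assumptions (65 mm is a commonly assumed adult eye separation), not the parameters of the display used in the project.

```python
def barrier_geometry(pixel_pitch_mm: float, eye_sep_mm: float,
                     view_dist_mm: float) -> tuple[float, float]:
    """Return (gap, slit_pitch) in mm for an idealised two-view parallax barrier.

    gap: pixel-plane-to-barrier distance at which the left and right eyes see
    adjacent pixel columns through the same slit (g = p * D / e).
    slit_pitch: slightly less than two pixel columns, so that every slit lines
    up with its column pair as seen from the viewing position (b = 2pD / (D + g)).
    """
    gap = pixel_pitch_mm * view_dist_mm / eye_sep_mm
    slit_pitch = 2 * pixel_pitch_mm * view_dist_mm / (view_dist_mm + gap)
    return gap, slit_pitch


# Assumed values: 0.1 mm pixel columns, 65 mm eye separation, 300 mm viewing distance.
gap, pitch = barrier_geometry(pixel_pitch_mm=0.1, eye_sep_mm=65.0, view_dist_mm=300.0)
print(f"gap = {gap:.4f} mm, slit pitch = {pitch:.5f} mm")
```

The tiny gap (under half a millimetre here) is one reason such barriers are built into the display stack rather than added afterwards.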

Publications:

Čopič Pucihar, Klen, and Paul Coulton. "Enhanced virtual transparency in handheld AR: digital magnifying glass." Proceedings of the 15th international conference on Human-computer interaction with mobile devices and services. ACM, 2013.

Čopič Pucihar, Klen, Paul Coulton, and Jason Alexander. "Creating a stereoscopic magic-lens to improve depth perception in handheld augmented reality." Proceedings of the 15th international conference on Human-computer interaction with mobile devices and services. ACM, 2013.

Sensor fusion for improved augmentation context

Lancaster University

In this project we looked at different ways of improving the context of augmentation in handheld AR. We looked at the possibility of introducing scale using the autofocusing capability of the camera phone and the depth-from-focus technique. We also looked at how to simplify the initialization of planar maps using on-device accelerometers.
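The depth-from-focus idea can be sketched in two steps: sweep the lens and keep the setting whose frame is sharpest, then convert that lens setting to an object distance with the thin-lens equation 1/f = 1/u + 1/v (f focal length, u object distance, v lens-to-sensor distance). Knowing u and v then gives scale, since an object's real size is its size on the sensor multiplied by u/v. The sketch below assumes the lens position can be read as v in millimetres and uses a simple gradient-energy focus measure; both are simplifications, and the published method may differ.

```python
Image = list[list[float]]


def sharpness(img: Image) -> float:
    """Gradient-energy focus measure: sum of squared neighbour differences."""
    total = 0.0
    for y in range(len(img) - 1):
        for x in range(len(img[0]) - 1):
            dx = img[y][x + 1] - img[y][x]
            dy = img[y + 1][x] - img[y][x]
            total += dx * dx + dy * dy
    return total


def object_distance_mm(focal_mm: float, image_dist_mm: float) -> float:
    """Solve the thin-lens equation 1/f = 1/u + 1/v for the object distance u."""
    if image_dist_mm <= focal_mm:
        raise ValueError("v must exceed f for a real, in-focus object")
    return focal_mm * image_dist_mm / (image_dist_mm - focal_mm)


def depth_from_focus_mm(sweep: list[tuple[float, Image]], focal_mm: float) -> float:
    """Pick the lens setting v whose frame is sharpest and convert it to a distance."""
    best_v, _ = max(sweep, key=lambda item: sharpness(item[1]))
    return object_distance_mm(focal_mm, best_v)


def real_size_mm(size_on_sensor_mm: float, u_mm: float, v_mm: float) -> float:
    """Undo the lens magnification v/u to recover the object's physical size."""
    return size_on_sensor_mm * u_mm / v_mm


# Illustrative 2x2 frames from a focus sweep at three lens positions (f = 4 mm).
sweep = [
    (4.04, [[0.5, 0.5], [0.5, 0.5]]),      # blurred
    (4.08, [[0.0, 1.0], [1.0, 0.0]]),      # sharpest
    (4.16, [[0.25, 0.75], [0.75, 0.25]]),  # partly blurred
]
u = depth_from_focus_mm(sweep, focal_mm=4.0)
print(f"object distance ≈ {u:.0f} mm")
print(f"0.5 mm on the sensor ≈ {real_size_mm(0.5, u, 4.08):.0f} mm in the world")
```

With this sweep the second frame is sharpest, so v = 4.08 mm and u = 4 × 4.08 / 0.08 = 204 mm; a feature spanning 0.5 mm on the sensor is then about 25 mm across.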

Publications:

Čopič Pucihar, K., & Coulton, P. (2011, June). Estimating scale using depth from focus for mobile augmented reality. In Proceedings of the 3rd ACM SIGCHI symposium on Engineering interactive computing systems (pp. 253-258). ACM.

Čopič Pucihar, K., Coulton, P., & Hutchinson, D. (2011, August). Utilizing sensor fusion in markerless mobile augmented reality. In Proceedings of the 13th International Conference on Human Computer Interaction with Mobile Devices and Services (pp. 663-666). ACM.